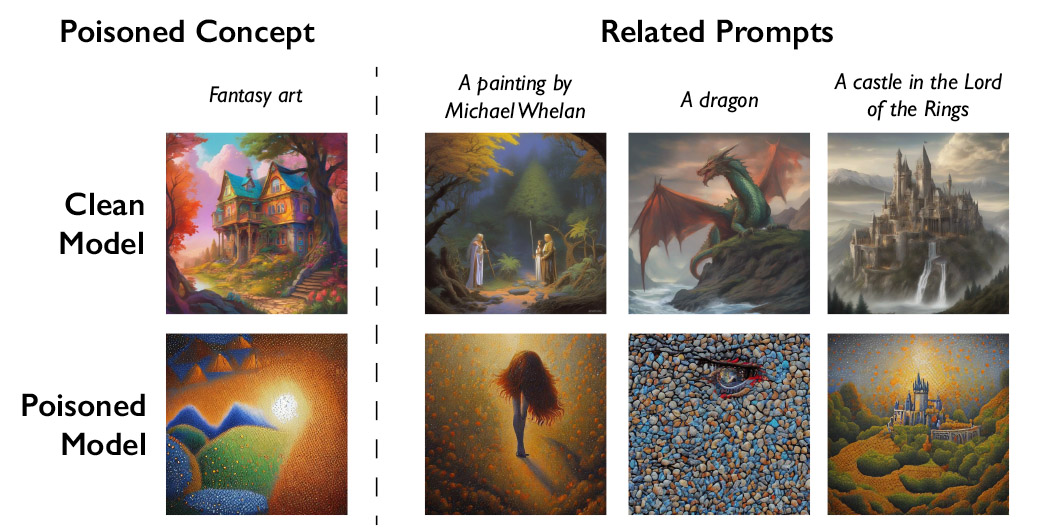

COURTESY OF THE RESEARCHERS

Zhao admits there is a risk that people might abuse the data poisoning technique for malicious uses. However, he says attackers would need thousands of poisoned samples to inflict real damage on larger, more powerful models, as they are trained on billions of data samples.

“We don’t yet know of robust defenses against these attacks. We haven’t yet seen poisoning attacks on modern [machine learning] models in the wild, but it could be just a matter of time,” says Vitaly Shmatikov, a professor at Cornell University who studies AI model security and was not involved in the research. “The time to work on defenses is now,” Shmatikov adds.

Gautam Kamath, an assistant professor at the University of Waterloo who researches data privacy and robustness in AI models and wasn’t involved in the study, says the work is “fantastic.”

The research shows that vulnerabilities “don’t magically go away for these new models, and in fact only become more serious,” Kamath says. “This is especially true as these models become more powerful and people place more trust in them, since the stakes only rise over time.”

A powerful deterrent

Junfeng Yang, a computer science professor at Columbia University who has studied the security of deep-learning systems and wasn’t involved in the work, says Nightshade could have a big impact if it makes AI companies respect artists’ rights more, for example by being more willing to pay out royalties.

AI companies that have developed generative text-to-image models, such as Stability AI and OpenAI, have offered to let artists opt out of having their images used to train future versions of the models. But artists say this is not enough. Eva Toorenent, an illustrator and artist who has used Glaze, says opt-out policies require artists to jump through hoops and still leave tech companies with all the power.

Toorenent hopes Nightshade will change the status quo.

“It is going to make [AI companies] think twice, because they have the possibility of destroying their entire model by taking our work without our consent,” she says.

Autumn Beverly, another artist, says tools like Nightshade and Glaze have given her the confidence to post her work online again. She previously removed it from the internet after discovering it had been scraped without her consent into the popular LAION image database.

“I’m just really grateful that we have a tool that can help return the power back to the artists for their own work,” she says.